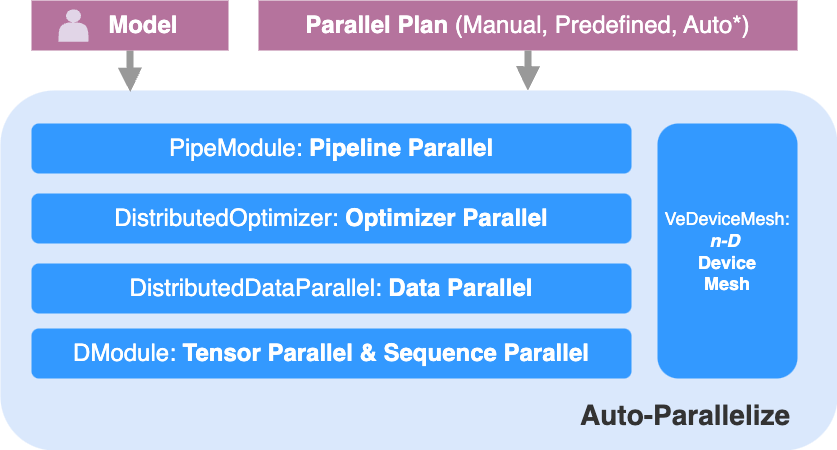

veScale Parallel Overview

The overview of veScale n-D parallelism is as follows:

(* is under development)

The Auto-Parallelize block takes the untouched Model from the user and Parallel Plan (given by manual effort, prefined for each model type, or automatically generated from Auto-Plan*) and then parallelizes the single-device model into nD Parallelism across a mesh of devices.

veScale's nD Parallelism follows a decoupled design where each D of parallelism is handled by an independent sub-block (e.g., DModule only handles Tensor & Sequence Parallel, without coupling with other Parallel). In contrast to the conventional coupled design that intertwines all parallelism together, such a decoupled nD Parallelism enjoys composability, debuggability, explainability, and extensibility, all of which are of great value for hyper-scale training in production.

4D Parallelisim API

Our 4D parallelism (Tensor, Sequence, Data, and ZeRO2) is as follows:

More examples can be found in: <repo>/examples/.

5D Parallelisim API

Coming Soon